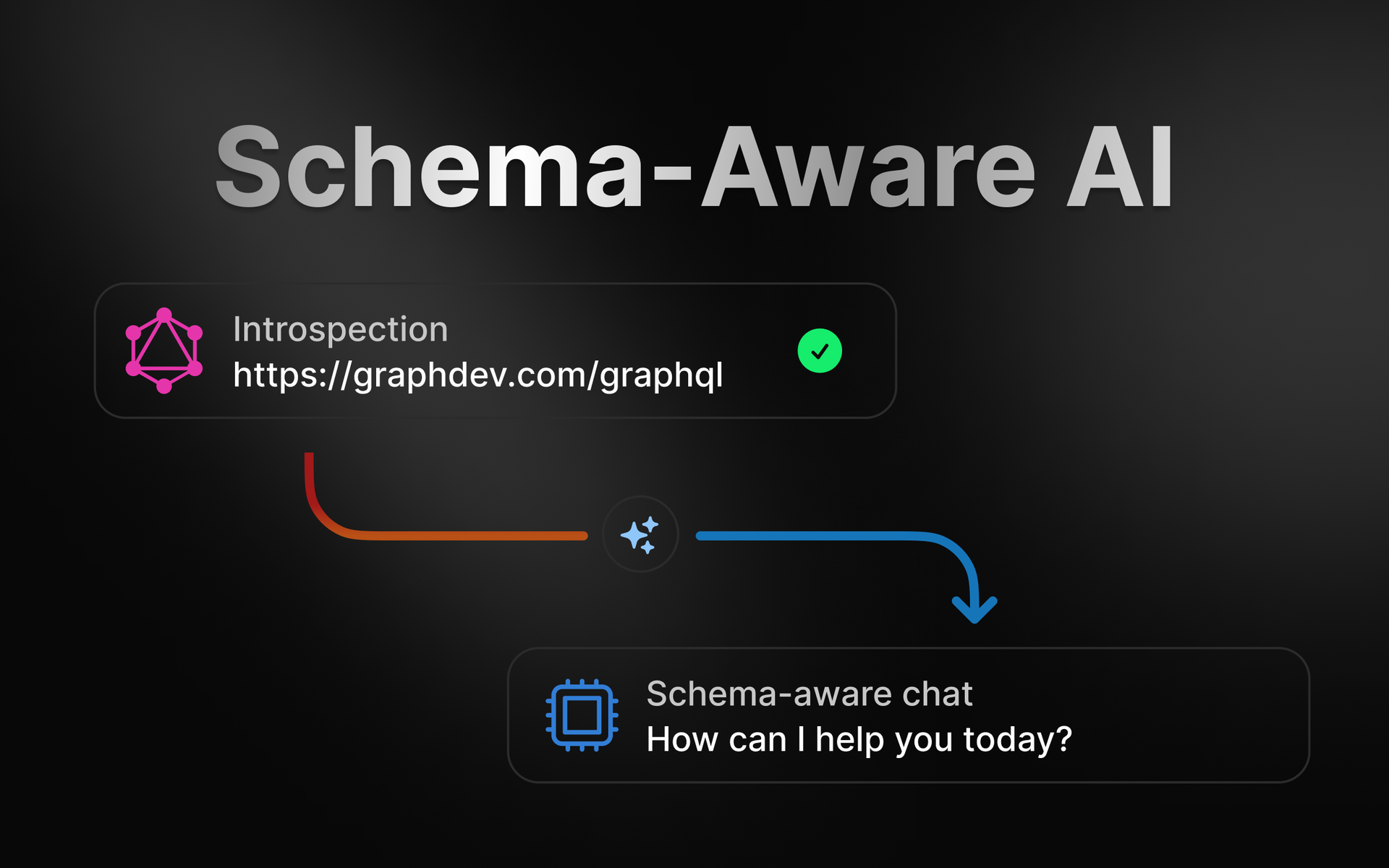

Schema-aware chat is the ability to load a GraphQL schema into the context of an LLM. This may seem as simple as passing your schema to the API as a string, but schemas can be large and context windows are limited, so it isn't always straightforward.

When building this feature into GraphDev, I had to consider a few approaches to make it work even for large schemas.

Choosing a model

When choosing which model to use, there are a few things to consider:

- Response quality

- Response time

- Context window size

- Cost

I tried two models for this feature: OpenAI's GPT-4 and Anthropic's Claude 3.5 Sonnet.

Both gave similar results in terms of quality, but Anthropic's model was faster and had a much larger context window.

Here are the context window sizes for each model:

- Claude 3.5 Sonnet: 200,000 tokens

- GPT-4: 32,000 tokens

The context window determines how much text the model can handle in a single request, so a larger window is a big win when loading large schemas.

For this reason, the obvious choice was Anthropic's Claude 3.5 Sonnet.
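Even with a large window, it can be worth estimating whether a schema will fit before sending it. The sketch below is a rough budget check, not an exact count: it assumes the common rule of thumb of about four characters per token for English text, which is not Claude's actual tokenizer and may be off for SDL. `estimateTokens`, `fitsInContext` and the 4,000-token reserve for history and reply are hypothetical choices for illustration.

```javascript
// Rough rule of thumb (an assumption): ~4 characters per token for English text.
// This is NOT the model's real tokenizer, so treat results as ballpark figures.
const CHARS_PER_TOKEN = 4

function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN)
}

// Hypothetical helper: does the schema fit while leaving `reservedTokens`
// free for the conversation history and the model's reply?
function fitsInContext(schemaText, contextWindow, reservedTokens = 4000) {
  return estimateTokens(schemaText) + reservedTokens <= contextWindow
}

// A 100,000-character schema is ~25,000 estimated tokens
console.log(fitsInContext('x'.repeat(100000), 32000)) // true
// A 200,000-character schema is ~50,000 estimated tokens
console.log(fitsInContext('x'.repeat(200000), 32000)) // false
```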

The basics of schema-aware chat

Like those of other LLM vendors, the Anthropic API is stateless: it has no memory of previous interactions. This is a good thing, as it means the model can be used in a wide variety of contexts.

But it also means we need to maintain this state ourselves when building a chat interface.

This means storing the conversation either in memory or in a database. Either way, each request to the model must include the entire history.

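```javascript
import Anthropic from '@anthropic-ai/sdk'

const anthropic = new Anthropic({
  apiKey: 'YOUR_API_KEY',
})

// The API is stateless, so every request must carry the full conversation so far
const conversationHistory = [
  { role: 'user', content: 'Which query do I need to find the current user?' },
  { role: 'assistant', content: 'You can access the `user` query to do this' },
  { role: 'user', content: 'Write a `user` query and include address details' },
]

const response = await anthropic.messages.create({
  model: 'claude-3-5-sonnet-20240620',
  max_tokens: 1024, // required by the Messages API
  messages: conversationHistory,
})

// The model will respond with the next message. We should add this
// to the conversation history to include in the next request.
conversationHistory.push({ role: 'assistant', content: response.content[0].text })
```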
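Something on our side has to hold that history between requests. A minimal in-memory store might look like the following sketch; `ConversationStore` and its methods are hypothetical names, and swapping the `Map` for a database table would persist conversations across restarts.

```javascript
// Hypothetical in-memory store: one message array per conversation ID.
class ConversationStore {
  constructor() {
    this.conversations = new Map()
  }

  // Append a turn, creating the conversation if needed. The Messages API only
  // accepts 'user' and 'assistant' roles; system text goes in `system` instead.
  append(conversationId, role, content) {
    if (role !== 'user' && role !== 'assistant') {
      throw new Error(`Unsupported role: ${role}`)
    }
    const history = this.conversations.get(conversationId) ?? []
    history.push({ role, content })
    this.conversations.set(conversationId, history)
  }

  // Return a shallow copy of the history, ready to pass as `messages`
  history(conversationId) {
    return [...(this.conversations.get(conversationId) ?? [])]
  }
}

// Usage: record the question, send the whole history, then record the reply
const store = new ConversationStore()
store.append('conv-1', 'user', 'Which query do I need to find the current user?')
// const response = await anthropic.messages.create({ ..., messages: store.history('conv-1') })
store.append('conv-1', 'assistant', 'You can access the `user` query to do this')
console.log(store.history('conv-1').length) // 2
```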

Now that we know how to maintain state, we can load the schema in the same way: either as part of the conversation history (the messages array) or as a separate system prompt, passed through the API's top-level system parameter.

```javascript
// Pass the schema to the model through the top-level `system` parameter
const system = JSON.stringify({
  instructions: 'You are an AI assistant helping with GraphQL queries. Use the provided graphql_schema.',
  graphql_schema: 'type Query { user: User } type User { name: String }',
})

const response = await anthropic.messages.create({
  model: 'claude-3-5-sonnet-20240620',
  max_tokens: 1024,
  messages: conversationHistory,
  system,
})
```

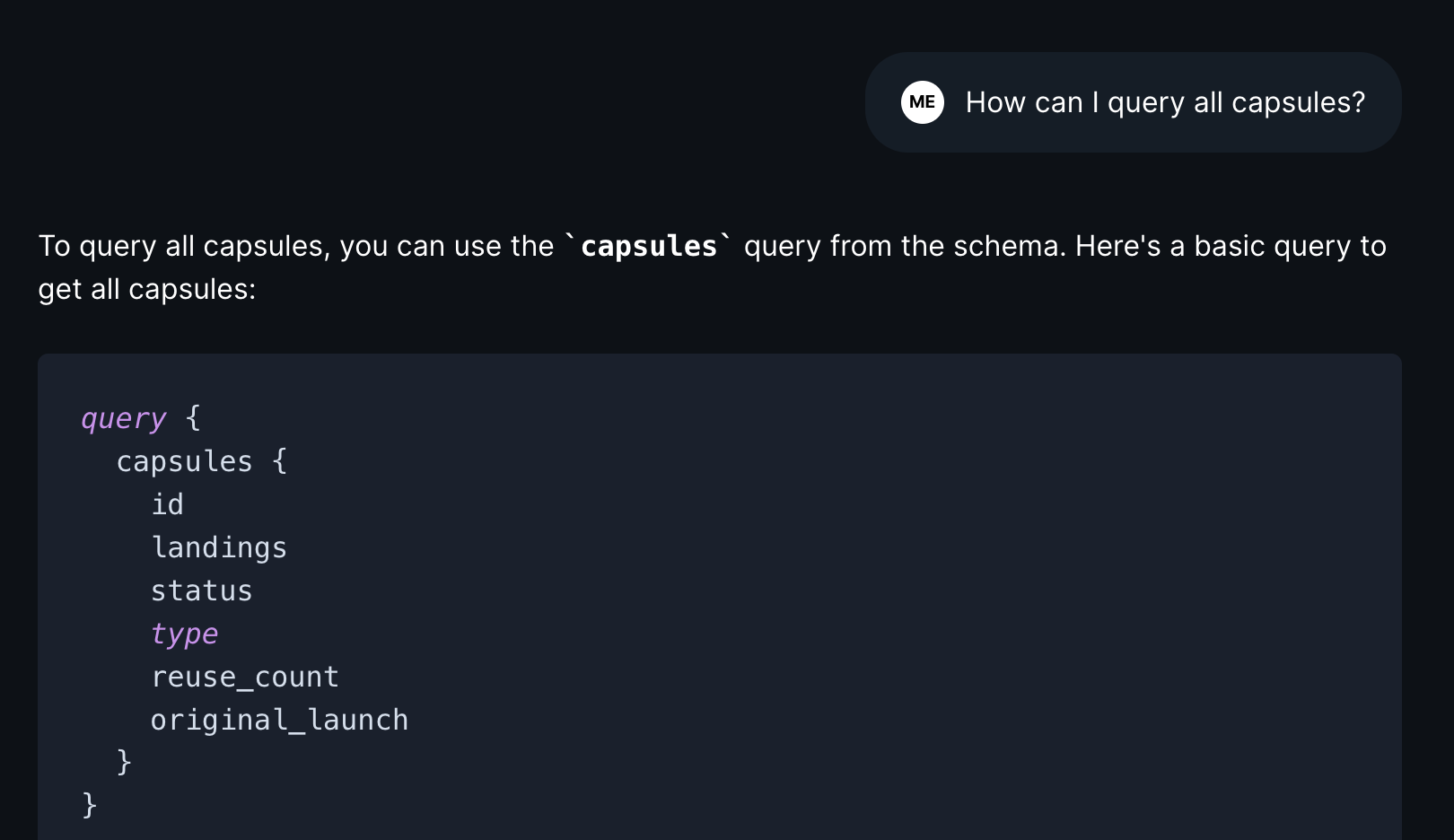

With this in place, we can ask questions directly related to the schema, and the model can give more accurate responses.
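The other option mentioned earlier is to carry the schema in the messages array instead. One way to sketch that, assuming the conversation starts with a user turn, is to prefix the schema onto the first user message each time the request is built; `withSchemaInHistory` and the preamble wording are hypothetical. It returns a new array, so the stored history stays free of the schema text.

```javascript
// Hypothetical helper: return a new messages array whose first user message
// is prefixed with the schema. Assumes the history begins with a user turn.
function withSchemaInHistory(schemaSDL, history) {
  if (history.length === 0 || history[0].role !== 'user') {
    throw new Error('Expected the conversation to start with a user message')
  }
  const [first, ...rest] = history
  const preamble = `Use this GraphQL schema to answer questions:\n${schemaSDL}\n\n`
  return [{ role: 'user', content: preamble + first.content }, ...rest]
}

const messages = withSchemaInHistory(
  'type Query { user: User } type User { name: String }',
  [{ role: 'user', content: 'Which query do I need to find the current user?' }],
)
console.log(messages[0].content.startsWith('Use this GraphQL schema')) // true
```

Keeping the schema in the system parameter, as above, avoids rewriting user messages; this variant simply shows the alternative.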

Handling large schemas

With the above approach, you'll quickly hit a wall with medium to large schemas: the schema exceeds the model's context window and the request fails with an error, even with Claude's much larger window.

The first easy win is to compress our schema by changing its format and removing unnecessary whitespace and comments.

For example, let's compare the schema as an introspection result in JSON with the same schema as a compressed SDL string.

```json
{
  "data": {
    "__schema": {
      "queryType": {
        "name": "Query"
      },
      "types": [
        {
          "kind": "OBJECT",
          "name": "Query",
          "fields": [
            {
              "name": "user",
              "type": {
                "kind": "OBJECT",
                "name": "User"
              }
            }
          ]
        },
        {
          "kind": "OBJECT",
          "name": "User",
          "fields": [
            {
              "name": "name",
              "type": {
                "kind": "SCALAR",
                "name": "String"
              }
            }
          ]
        }
      ]
    }
  }
}
```

Compressed:

```graphql
type Query { user: User } type User { name: String }
```

Both represent the same schema and contain the same information, but the compressed version is much smaller:

- JSON (minified): 261 characters

- Compressed: 52 characters

In this small example, the compressed version is more than 5x smaller, which makes a big difference when loading the schema into the model.
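The character counts above can be reproduced with a few lines. This assumes the JSON is measured minified (no whitespace); the pretty-printed listing above would be longer still.

```javascript
// The introspection result from the listing above, as a plain object
const introspectionJson = {
  data: {
    __schema: {
      queryType: { name: 'Query' },
      types: [
        {
          kind: 'OBJECT',
          name: 'Query',
          fields: [{ name: 'user', type: { kind: 'OBJECT', name: 'User' } }],
        },
        {
          kind: 'OBJECT',
          name: 'User',
          fields: [{ name: 'name', type: { kind: 'SCALAR', name: 'String' } }],
        },
      ],
    },
  },
}

// The same schema as compressed SDL
const sdl = 'type Query { user: User } type User { name: String }'

// JSON.stringify without an indent argument produces minified JSON
const jsonLength = JSON.stringify(introspectionJson).length
const sdlLength = sdl.length
const ratio = jsonLength / sdlLength

console.log(jsonLength, sdlLength, ratio.toFixed(2)) // 261 52 5.02
```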

To compress our schema, we can build it from an introspection result, print it as SDL and strip the characters GraphQL ignores:

```javascript
import {
  buildClientSchema,
  getIntrospectionQuery,
  printSchema,
  stripIgnoredCharacters,
} from 'graphql'

// Introspect the schema (or provide it through another method)
const introspectionResult = await fetch('/graphql', {
  method: 'POST',
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({ query: getIntrospectionQuery() }),
}).then((res) => res.json())

// Build the schema for client use
const schema = buildClientSchema(introspectionResult.data)

// Print the schema as SDL, then strip characters the parser ignores,
// such as insignificant whitespace and comments (descriptions are kept)
const compressedSchema = stripIgnoredCharacters(printSchema(schema))
```

Validating results

The beauty of having the schema is that we can also validate the model's responses. This can be done by parsing the generated query and validating it against the schema, or by executing it against a mock server.

We do this in GraphDev, which also provides intellisense when typing queries into the query editor.
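Before a generated query can be parsed or validated, it has to be pulled out of the model's reply, which usually wraps it in prose and a Markdown code fence. A minimal sketch of that step follows; `extractGraphQLBlocks` is a hypothetical helper, and it assumes queries arrive in fences tagged `graphql` or `gql`. Each extracted string could then be checked with graphql-js, where `parse` throws on syntax errors and `validate(schema, parse(query))` returns an array of errors that is empty when the query is valid against the schema.

```javascript
// Three backticks, built with repeat() so this snippet can itself sit inside a Markdown fence
const FENCE = '`'.repeat(3)

// Matches fenced blocks tagged graphql or gql and captures their contents
const FENCED_GRAPHQL = new RegExp(
  `${FENCE}(?:graphql|gql)[ \\t]*\\r?\\n([\\s\\S]*?)${FENCE}`,
  'g',
)

// Hypothetical helper: return every GraphQL snippet in a model reply, trimmed
function extractGraphQLBlocks(reply) {
  return [...reply.matchAll(FENCED_GRAPHQL)].map((match) => match[1].trim())
}

const reply = [
  'You can fetch the current user like this:',
  `${FENCE}graphql`,
  'query { user { name } }',
  FENCE,
].join('\n')

console.log(extractGraphQLBlocks(reply)) // [ 'query { user { name } }' ]
```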

Taking it further

What happens when we work with even larger schemas, ones that are too large to fit into the context window even when compressed?

For these scenarios, we can use a variation of the sliding window technique: break the schema into smaller parts and load them one at a time.
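A minimal sketch of the splitting step is below. It assumes the schema has been printed with graphql-js `printSchema`, which separates top-level definitions with a blank line, and it greedily packs whole definitions into chunks under a character budget so no type is cut in half. `chunkSchema` and `maxChars` are hypothetical; a top-level description containing blank lines would be split by this simple rule, and a single definition larger than the budget still gets a chunk of its own. Each chunk could then be compressed with `stripIgnoredCharacters` before sending.

```javascript
// Hypothetical helper: split printed SDL into chunks of at most maxChars,
// keeping each top-level definition whole. Splits only at blank lines that are
// followed by an unindented line, so blank lines inside a type don't split it.
function chunkSchema(printedSchema, maxChars) {
  const definitions = printedSchema
    .split(/\n\s*\n(?=\S)/)
    .map((definition) => definition.trim())
    .filter(Boolean)

  const chunks = []
  let current = ''
  for (const definition of definitions) {
    const candidate = current ? `${current}\n\n${definition}` : definition
    if (candidate.length <= maxChars || current === '') {
      // Fits the budget, or is an oversized definition that must stand alone
      current = candidate
    } else {
      chunks.push(current)
      current = definition
    }
  }
  if (current) chunks.push(current)
  return chunks
}

const printed = 'type Query {\n  user: User\n}\n\ntype User {\n  name: String\n}\n\nscalar Date'
console.log(chunkSchema(printed, 60).length) // 2
```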

For example, we could ask a question like:

"How can I search for all orders placed by a user?"

On the backend, we could then turn this into a more detailed prompt for each part of the schema:

You are an AI assistant helping with GraphQL queries. The schema is too large to provide in one go, so I'll provide it in parts.

Here is the next part of the schema:

"type Order { id: ID user: User }"

Here is your response so far:

"You can query using the searchOrders query; however, I do not have the schema for this type yet."

The question is:

"How can I search for all orders placed by a user?"

Following this method, we only need to load a small chunk of the schema at a time, and the model builds up a picture based on its previous responses. In principle, this lets us work with schemas of any size, at the cost of one request per chunk.
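The loop driving this conversation could look like the sketch below. `askModel` stands in for any async function that sends a prompt and returns the reply text (for example, a wrapper around `anthropic.messages.create` from earlier); it is passed in so the loop can be tried without network calls. `buildChunkPrompt` and `answerAcrossChunks` are hypothetical names, and the prompt wording follows the example above.

```javascript
// Hypothetical prompt builder mirroring the example prompt above
function buildChunkPrompt({ chunk, answerSoFar, question }) {
  return [
    "You are an AI assistant helping with GraphQL queries. The schema is too large to provide in one go, so I'll provide it in parts.",
    `Here is the next part of the schema:\n"${chunk}"`,
    `Here is your response so far:\n"${answerSoFar || 'No response yet.'}"`,
    `The question is:\n"${question}"`,
  ].join('\n\n')
}

// Send one request per chunk, carrying the previous answer forward so the
// model can refine it as it sees more of the schema.
// `askModel(prompt)` is an injected async function returning the reply text.
async function answerAcrossChunks(chunks, question, askModel) {
  let answer = ''
  for (const chunk of chunks) {
    answer = await askModel(buildChunkPrompt({ chunk, answerSoFar: answer, question }))
  }
  return answer
}

// Usage with a stand-in model that only reports how many parts it has seen
let calls = 0
const fakeModel = async () => `Answer after ${++calls} part(s)`
answerAcrossChunks(
  ['type Order { id: ID user: User }', 'type User { id: ID }'],
  'How can I search for all orders placed by a user?',
  fakeModel,
).then((answer) => console.log(answer)) // Answer after 2 part(s)
```

Because only the running answer is carried forward, details from earlier chunks survive only if they made it into that answer.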

See it in action

Schema-aware chat is now available in GraphDev. You can try it out by asking questions about your schema; the model uses the schema you provide to give more accurate responses.

Thanks for reading!