What is the N+1 problem?

The N+1 problem is a common performance issue which occurs when building GraphQL APIs. You'll likely run into this issue when fetching related data through a nested resolver.

For example, let's say you have a Post type and an Author type. When you query for a list of posts and include the author field, you'll end up making N+1 queries to the database.

N being the number of authors requested and 1 being the initial query to fetch the posts. So if we returned 10 posts, we would make 11 queries to the database.

That's a lot of round trips to the database, and it can slow down your application significantly as the number of records grows, or the complexity of the queries increase.

Let's take a look at the following query and it's resolvers:

query {

posts {

title

author {

name

}

}

}

const resolvers = {

Query: {

posts: async () => {

return await Post.find()

},

},

Post: {

author: async (post) => {

return await Author.findById(post.authorId)

},

},

}

With the above query returning 10 posts, let's look at the SQL we would generate:

SELECT * FROM posts;

SELECT * FROM authors WHERE id = 1;

SELECT * FROM authors WHERE id = 2;

SELECT * FROM authors WHERE id = 3;

...

SELECT * FROM authors WHERE id = 10;

This issue is particularly pervasive because it throws no errors, causes no lint warnings and will easily make it into production without being noticed.

How to solve the N+1 problem with dataloaders

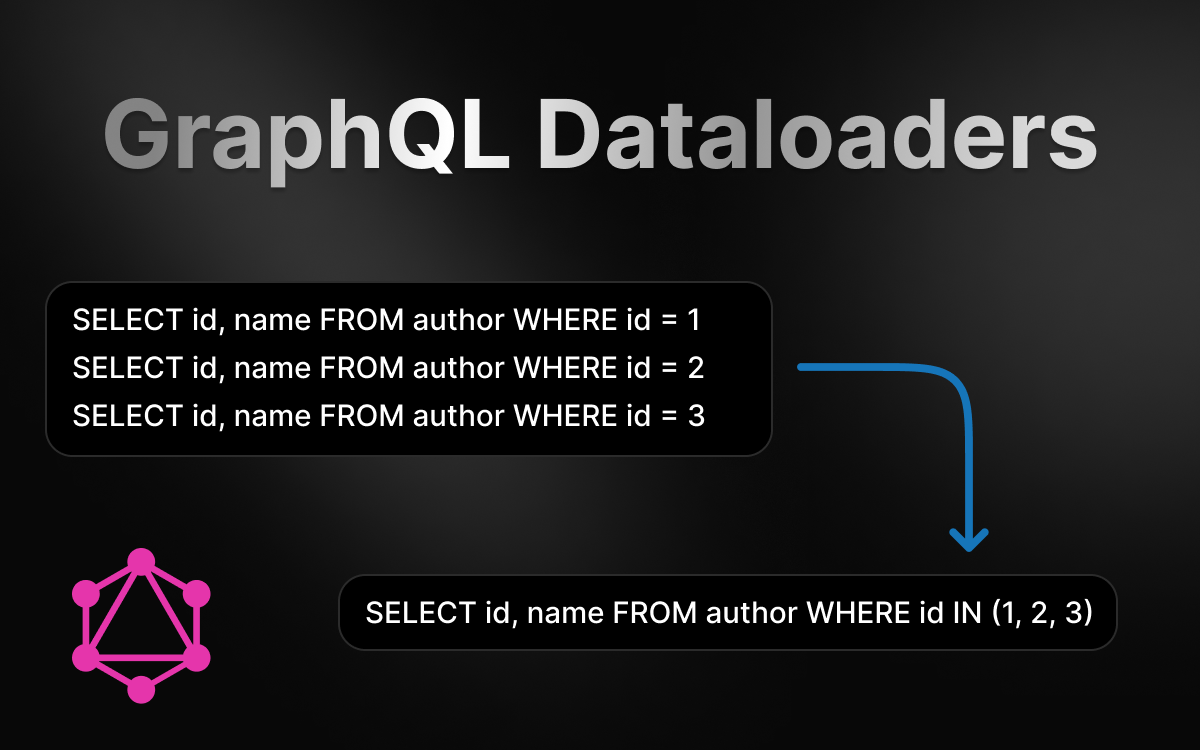

The answer is dataloaders. Dataloaders are a pattern that allows you to batch and cache queries to your database. This means that instead of making N+1 queries, you can batch all the author IDs and fetch them in a single query.

If you're using node you can use the dataloader package to implement dataloaders in your GraphQL server. But regardless of your stack, the concept remains the same.

npm install dataloader

The dataloader library provides a new class Dataloader which we can use to write a dataloader for each query we want to batch. In our case let's look at the Author type.

First we write a dataloader for the Author type:

import DataLoader from 'dataloader'

const authorLoader = new DataLoader(async (keys) => {

// Fetch all authors with the given keys

const authors = await Author.find({ _id: { $in: keys } })

// Map the authors back to the keys

return keys.map((key) => {

return authors.find((author) => author.id === key)

})

})

And then you can use the authorLoader in your resolver:

const resolvers = {

Query: {

posts: async () => {

return await Post.find()

},

},

Post: {

author: async (post) => {

return await context.authorLoader.load(post.authorId)

},

},

}

If we called .load with 10 author IDs, the dataloader would receive all unique ids. Allowing us to send a single query.

Each time our nested resolver is called, we use the load method of our dataloader.

context.authorLoader.load(1)

context.authorLoader.load(2)

context.authorLoader.load(3)

...

context.authorLoader.load(10)

This would mean the dataloader would receive all unique author IDs and we could fetch all authors in a single query.

SELECT * FROM authors WHERE id IN (1, 2, 3, ..., 10);

This means we are only sending two queries to the database, one for the posts and one for the authors. This is a huge improvement over the N+1 problem.

SELECT * FROM posts;

SELECT * FROM authors WHERE id IN (1, 2, 3, ..., 10);

Data leakage

Because the dataloader is caching keys and results, it is important we generate a new instances on each request. We do this by attaching the dataloader to the context object.

const server = new ApolloServer({

typeDefs,

resolvers,

context: () => {

return {

authorLoader: new DataLoader(async (keys) => {

// Fetch all authors with the given keys

const authors = await Author.find({ _id: { $in: keys } })

// Map the authors back to the keys

return keys.map((key) => {

return authors.find((author) => author.id === key)

})

}),

}

},

})

The context callback is fired for each request, meaning we get a new dataloader for each request. This means each client gets their own cache and we avoid data leakage.

If you were to just import and use a dataloader instance, you would be sharing the cache between all clients. This would mean that one client could see the data of another client. Very bad!

Conclusion

The N+1 problem is a common performance issue when building GraphQL APIs. By using dataloaders, you can batch and cache queries to your database, reducing the number of round trips and improving the performance of your application.